⚙️ AI AUTOMATION (ready to use)

Good day AI Business Transformation Community! 🦾 As we dive deeper 🤿 into the AI Transformation journey, we have decided to launch several 𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗮𝘁𝗶𝗼𝗻 𝗮𝗻𝗱 𝗦𝗼𝗹𝘂𝘁𝗶𝗼𝗻 𝗱𝗮𝘁𝗮𝗯𝗮𝘀𝗲𝘀 for you. 💪🏼 Starting today, you can access our ⚙️ 𝗔𝗜 𝗔𝗨𝗧𝗢𝗠𝗔𝗧𝗜𝗢𝗡 (𝗿𝗲𝗮𝗱𝘆 𝘁𝗼 𝘂𝘀𝗲) 𝗳𝗼𝗿 𝗙𝗥𝗘𝗘! 🔥

We have created our AI automation templates for MAKE.com, where we are also an affiliate partner. If you want to support the growth of our community, please register through our link: https://www.make.com/en/register?pc=aitransformation. We are applying for a SPECIAL PROMO, under which anyone who registers for Make using our link will automatically receive 1 𝗺𝗼𝗻𝘁𝗵 𝗼𝗳 𝘁𝗵𝗲 𝗣𝗿𝗼 𝗽𝗹𝗮𝗻 𝘄𝗶𝘁𝗵 10,000 𝗼𝗽𝗲𝗿𝗮𝘁𝗶𝗼𝗻𝘀 𝗳𝗼𝗿 𝗳𝗿𝗲𝗲! (Let us know, so we can provide you with a special link for your PROMO.)

Access our MAKE templates here. 𝗧𝗵𝗲 𝘁𝗲𝗺𝗽𝗹𝗮𝘁𝗲𝘀 𝗮𝗿𝗲 𝗱𝗶𝘃𝗶𝗱𝗲𝗱 𝗶𝗻𝘁𝗼 𝗱𝗶𝗳𝗳𝗲𝗿𝗲𝗻𝘁 𝗰𝗮𝘁𝗲𝗴𝗼𝗿𝗶𝗲𝘀:
✍🏻 Content Creation
📲 Social Media Management
🎯 Lead Management & Marketing Campaigns
💸 Sales & Client Management

𝗛𝗮𝗽𝗽𝘆 𝗔𝗜 𝘁𝗿𝗮𝗻𝘀𝗶𝘁𝗶𝗼𝗻𝗶𝗻𝗴! 🦾

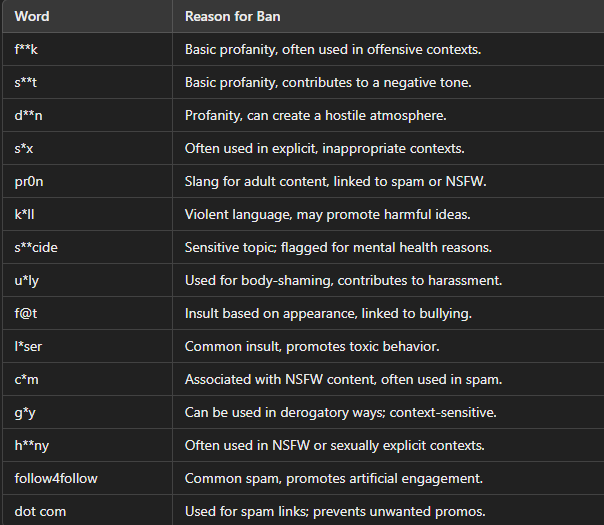

Creating lists of forbidden keywords for chats and comment sections with AI

In online communities, maintaining a positive and respectful environment is crucial, especially in spaces like Twitch chat, YouTube comments, or social media threads. A keyword filter is a great way to help achieve this, and with the assistance of AI, it’s easier to set up a customized list that captures a wide variety of inappropriate language, from offensive slurs to spam phrases. However, if you ask ChatGPT for such a list, for example, it will not give you a clear answer. Instead, it censors itself (see the attached screenshot).

Another important aspect to consider is that some words, like “gay,” have multiple uses. While such terms may appear in an insulting context, they are also essential for normal, positive communication. For example, if someone wishes to come out as gay, an overly restrictive AI filter might block that message in error.

Multilingual contexts present a further challenge. AI systems trained primarily on English data can misinterpret words in other languages as English curse words. A simple example is the Luxembourgish sentence “Dat ass richteg flott!”, which means “This is really nice!” in English. Here, the AI could mistakenly censor the word “ass” (which means “is” in Luxembourgish) as the English term “ass.”

This brings us to an important question: do the benefits of an automated filter outweigh these limitations? And what countermeasures can we implement to prevent an overly restrictive AI filter?
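The countermeasures raised in this post can be illustrated with a small filter sketch. It is a minimal example, not a production moderation system, and everything in it is a hypothetical assumption for illustration: the `KeywordFilter` class, the example word lists, and the idea that the message language (`lang`, an ISO 639-1 code such as "lb" for Luxembourgish) is already known, for example from a channel setting, rather than detected automatically. It shows three ideas: whole-word matching, so “class” does not trigger “ass”; per-language exemptions; and sending ambiguous terms such as “gay” to a human moderator instead of blocking them.

```python
import re
from dataclasses import dataclass, field


@dataclass
class KeywordFilter:
    """Hypothetical keyword filter: whole-word matching, per-language
    exemptions, and a human-review tier for ambiguous terms."""

    blocked: set[str]  # words that are always blocked
    # Ambiguous words (an insult in one context, normal speech in another):
    # flag them for a human moderator instead of blocking automatically.
    review: set[str] = field(default_factory=set)
    # Words that are harmless in a given language, keyed by ISO 639-1 code,
    # e.g. "ass" means "is" in Luxembourgish ("lb").
    exemptions: dict[str, set[str]] = field(default_factory=dict)

    def check(self, message: str, lang: str = "en") -> str:
        """Return "block", "review", or "allow" for one message."""
        # Split into whole words so "class" never matches "ass".
        words = re.findall(r"\w+", message.lower())
        exempt = self.exemptions.get(lang, set())
        relevant = [w for w in words if w not in exempt]
        if any(w in self.blocked for w in relevant):
            return "block"
        if any(w in self.review for w in relevant):
            return "review"
        return "allow"


# Example lists for illustration only; a real list would be curated per community.
demo = KeywordFilter(
    blocked={"ass", "idiot"},
    review={"gay"},
    exemptions={"lb": {"ass"}},
)

print(demo.check("Dat ass richteg flott!", lang="lb"))  # allow
print(demo.check("Dat ass richteg flott!"))             # block (checked as English)
print(demo.check("I want to come out as gay"))          # review
print(demo.check("That was a great class"))             # allow
```

Routing ambiguous words to a moderator keeps the automatic part strict only where the meaning is clear; the trade-off is extra manual review work, which is one concrete way to weigh the benefits of automation against its limitations.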

Classroom: 🦾 AI AGENTS

This post connects the 🦾 AI AGENTS course with our community. 🚀 Whenever you have 𝘀𝗽𝗲𝗰𝗶𝗳𝗶𝗰 𝗾𝘂𝗲𝘀𝘁𝗶𝗼𝗻𝘀 𝗮𝗯𝗼𝘂𝘁 𝗰𝗼𝗻𝗰𝗲𝗽𝘁𝘀, 𝗲𝘅𝗽𝗹𝗮𝗻𝗮𝘁𝗶𝗼𝗻𝘀, 𝗼𝗿 𝗺𝗮𝘁𝗲𝗿𝗶𝗮𝗹 inside the course, feel free to use this post to bring those topics to the community and discuss them 💪🏼.

Classroom: ⚙️ AI AUTOMATION

This post connects the ⚙️ AI AUTOMATION course with our community. 🚀 Whenever you have 𝘀𝗽𝗲𝗰𝗶𝗳𝗶𝗰 𝗾𝘂𝗲𝘀𝘁𝗶𝗼𝗻𝘀 𝗮𝗯𝗼𝘂𝘁 𝗰𝗼𝗻𝗰𝗲𝗽𝘁𝘀, 𝗲𝘅𝗽𝗹𝗮𝗻𝗮𝘁𝗶𝗼𝗻𝘀, 𝗼𝗿 𝗺𝗮𝘁𝗲𝗿𝗶𝗮𝗹 inside the course, feel free to use this post to bring those topics to the community and discuss them 💪🏼.

Classroom: 🎯 AI CONTENT CURATION

This post connects the 🎯 AI CONTENT CURATION course with our community. 🚀 Whenever you have 𝘀𝗽𝗲𝗰𝗶𝗳𝗶𝗰 𝗾𝘂𝗲𝘀𝘁𝗶𝗼𝗻𝘀 𝗮𝗯𝗼𝘂𝘁 𝗰𝗼𝗻𝗰𝗲𝗽𝘁𝘀, 𝗲𝘅𝗽𝗹𝗮𝗻𝗮𝘁𝗶𝗼𝗻𝘀, 𝗼𝗿 𝗺𝗮𝘁𝗲𝗿𝗶𝗮𝗹 inside the course, feel free to use this post to bring those topics to the community and discuss them 💪🏼.

skool.com/netregie-ai

𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺 𝘆𝗼𝘂𝗿 𝗖𝗼𝗺𝗽𝗮𝗻𝘆 🦾 Unlock #𝗔𝗜 for your 𝗯𝘂𝘀𝗶𝗻𝗲𝘀𝘀! 🤖 𝗦𝗧𝗔𝗥𝗧 𝗬𝗢𝗨𝗥 𝗝𝗢𝗨𝗥𝗡𝗘𝗬 𝗡𝗢𝗪 ⤵️